Neural Reconstruction for Robotics and Autonomous Vehicles: The Future of Physical AI Simulation

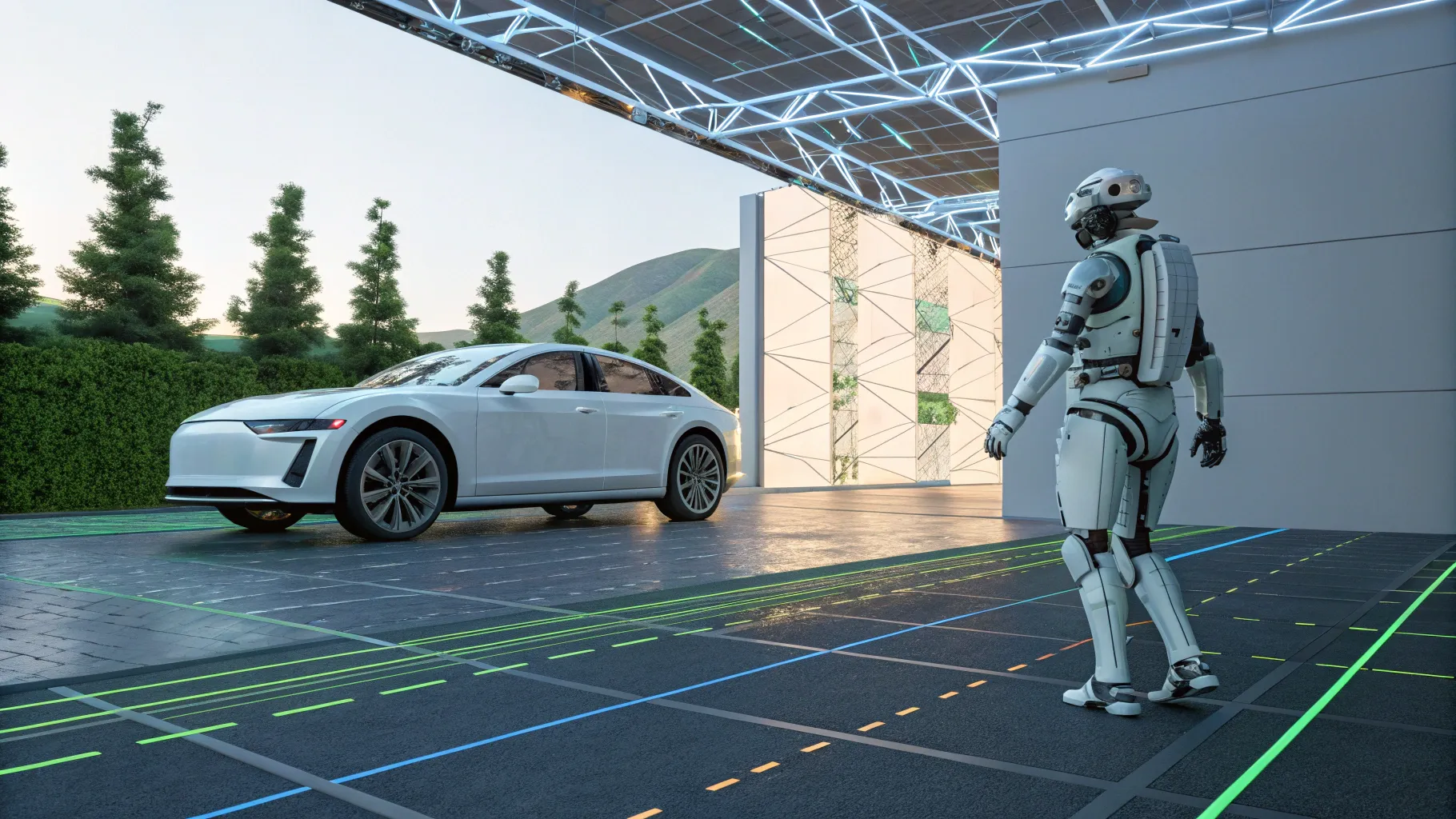

As the world rapidly embraces the era of physical AI, the way we interact with machines, vehicles, and robots is undergoing a profound transformation. Autonomous vehicles are no longer a distant dream, and robots are becoming integral parts of our daily environments—from kitchens and offices to sprawling warehouses. At the heart of this revolution lies the challenge of creating high-fidelity, realistic simulations that can rigorously test and validate these intelligent systems before they navigate the real world.

NVIDIA, a pioneer in AI and simulation technologies, has introduced groundbreaking advancements in neural reconstruction and simulation that are setting new standards for robotics and autonomous vehicle development. Leveraging cutting-edge Gaussian splatting techniques and generative AI, NVIDIA Omniverse NuRec libraries transform raw sensor data into photorealistic 3D scenes. These digital environments form the foundation for testing AI-driven systems with unprecedented accuracy and scale.

In this article, I will walk you through the exciting innovations NVIDIA has brought to the table, explain how neural reconstruction is reshaping simulation, and explore the implications for the next generation of robotics and autonomous vehicles. Whether you’re a robotics enthusiast, a developer, or simply curious about the future of physical AI, this deep dive will provide valuable insights into how simulation and AI are converging to create safer, smarter machines.

🚗 The Era of Physical AI: Transforming Movement and Interaction

We are entering an era where AI is no longer confined to virtual spaces but is actively participating in the physical world. This era of physical AI encompasses autonomous vehicles navigating complex environments, robots assisting in household chores, and intelligent machines streamlining industrial operations.

What makes physical AI particularly fascinating—and challenging—is its need to interact with the real world in real time. Unlike traditional software that operates in controlled digital environments, physical AI must perceive, understand, and respond to dynamic, unpredictable surroundings. This makes the development and testing of these systems uniquely complex.

Simulation is the key to overcoming these challenges. By creating virtual replicas of the real world, developers can safely test AI behaviors under countless scenarios without the risks and costs of real-world trials. However, the quality and realism of these simulations directly impact the reliability of the AI systems being developed.

NVIDIA’s approach leverages neural reconstruction to bring the real world into simulation with stunning photorealism and physical accuracy. This technology is a game-changer for autonomous vehicle testing and robotic operation, allowing AI systems to be trained and validated in environments that closely mimic reality.

🌐 Neural Reconstruction: From Real-World Data to Photorealistic 3D Scenes

One of the fundamental breakthroughs NVIDIA has introduced is the use of neural reconstruction techniques based on Gaussian splatting to convert real-world sensor data into rich, three-dimensional digital environments. This process is powered by the NVIDIA Omniverse NuRec libraries, which can take raw video and sensor inputs and transform them into fully interactive, photorealistic 3D scenes.

Here’s how it works:

- Data Collection: Autonomous vehicle sensors, including cameras, LiDAR, and radar, capture vast amounts of real-world data as the vehicle moves through its environment.

- Neural Reconstruction: Neural reconstruction methods process this sensor data into a Gaussian-based 3D representation. Unlike traditional polygonal models, Gaussian splatting allows for more efficient and visually rich reconstructions that capture subtle details and lighting effects.

- Scene Generation: The reconstructed scenes are rendered into photorealistic environments within NVIDIA Omniverse, creating a virtual world that looks and behaves like the real one.
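To make the "Gaussian-based 3D representation" less abstract, here is a minimal sketch of what a single splat might look like as a data structure. The class name `Gaussian3D`, its fields, and the `weight_at` method are my own illustrative assumptions, not the NuRec API. Production Gaussian splatting typically uses a full covariance (rotation plus scale) and view-dependent color, which this axis-aligned version leaves out.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Gaussian3D:
    """One splat: an axis-aligned 3D Gaussian (hypothetical, simplified fields)."""
    mean: Vec3       # center in world space
    scale: Vec3      # standard deviation along x, y, z
    color: Vec3      # RGB, each channel in [0, 1]
    opacity: float   # peak opacity in [0, 1]

    def weight_at(self, point: Vec3) -> float:
        """Opacity at `point`: opacity * exp(-0.5 * squared normalized distance)."""
        d2 = sum(((p - m) / s) ** 2 for p, m, s in zip(point, self.mean, self.scale))
        return self.opacity * math.exp(-0.5 * d2)


splat = Gaussian3D(mean=(0.0, 0.0, 0.0), scale=(1.0, 1.0, 1.0),
                   color=(0.8, 0.2, 0.2), opacity=0.9)
print(splat.weight_at((0.0, 0.0, 0.0)))  # at the center: equals the peak opacity
print(splat.weight_at((1.0, 0.0, 0.0)))  # one standard deviation away: smaller
```

A reconstructed scene is then, conceptually, a very large list of such splats whose parameters have been fitted so that rendering them reproduces the captured sensor images.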

This process enables developers to create highly detailed digital twins of real-world locations. These digital twins serve as testbeds for autonomous vehicles, allowing AI drivers to be evaluated in a wide range of scenarios, including rare and dangerous situations that would be difficult or unsafe to replicate in the physical world.

By simulating these environments with variations—such as different weather conditions, lighting, and traffic patterns—developers can rigorously assess the AI’s ability to generate safe trajectories and make real-time decisions.

🤖 Beyond Roads: Physical AI in Diverse Environments

While autonomous vehicles are a prominent example of physical AI, the technology extends far beyond just driving on roads. Robots are increasingly operating in settings such as kitchens, offices, and warehouses, where they perform tasks ranging from cooking and cleaning to inventory management and logistics.

Each of these use cases demands a unique approach to simulation. The environments are often complex and dynamic, with multiple objects and humans interacting simultaneously. To effectively train and test robots in these scenarios, simulation environments must accurately replicate the physical world’s intricacies.

NVIDIA’s neural reconstruction technology plays a crucial role here as well. By capturing sensor data from these varied environments and reconstructing them into interactive 3D scenes, developers can simulate robot behavior with high fidelity. This enables robots to be tested for safety, efficiency, and adaptability before deployment.

For instance, a warehouse robot can be simulated navigating aisles, avoiding obstacles, and interacting with packages and humans. The simulation can include realistic physics interactions, where the robot’s movements and manipulations are governed by the laws of physics, ensuring that the AI’s decisions are grounded in reality.

⚙️ Real-Time Rendering and Physical Interaction in 3D Gaussian Scenes

One of the standout features of NVIDIA’s approach is the ability to rapidly render these complex 3D Gaussian scenes in real time with high fidelity. This is essential for interactive simulation, where robots or autonomous vehicles can be tested in dynamic environments without delays.

Traditional 3D rendering techniques often struggle with the computational demands of photorealistic environments, particularly when physics-based interactions are involved. The Gaussian splatting method NVIDIA uses addresses this by representing a scene as a collection of 3D Gaussians, each with a position, shape, color, and opacity. To draw a frame, the renderer projects the Gaussians onto the image plane, sorts them by depth, and blends them, a process that maps well to GPU rasterization.
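To illustrate the blending step at the heart of Gaussian splatting, here is a minimal sketch of front-to-back alpha compositing for a single pixel. The function name `composite` and its input format are assumptions made for this example. It assumes the splats covering the pixel have already been projected, evaluated to a per-pixel alpha, and sorted nearest-first. A real renderer does this in parallel on the GPU.

```python
def composite(splats):
    """Front-to-back alpha blending for one pixel.

    `splats` is a list of (color, alpha) pairs sorted nearest-first, where
    color is an (r, g, b) tuple and alpha is in [0, 1].
    Returns the blended color and the remaining transmittance.
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed by nearer splats
    for color, alpha in splats:
        weight = alpha * transmittance
        for i in range(3):
            pixel[i] += weight * color[i]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is effectively opaque, stop early
            break
    return tuple(pixel), transmittance


# A half-transparent red splat in front of a half-transparent blue one.
pixel, remaining = composite([((1.0, 0.0, 0.0), 0.5), ((0.0, 0.0, 1.0), 0.5)])
print(pixel, remaining)  # (0.5, 0.0, 0.25) 0.25
```

The nearer red splat contributes half of its color, and the blue splat behind it contributes only a quarter because half of the light has already been absorbed. The early exit is one reason this style of rendering can stay fast: once a pixel is opaque, splats further back are skipped.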

Moreover, the integration of physics into these 3D Gaussian scenes allows for realistic interaction between robots and their virtual surroundings. Robots can manipulate objects, respond to collisions, and adapt their movements based on physical feedback, just as they would in the real world.

This level of realism in simulation is critical for developing AI that performs reliably and safely in physical environments. It helps behaviors learned in simulation transfer to real-world applications, reducing the risk of unexpected failures.

🎨 Scaling Simulations with Generative AI

Creating diverse simulation scenarios is vital for training robust AI systems. However, manually designing numerous variations of a scene can be time-consuming and resource-intensive. This is where generative AI comes into play.

NVIDIA’s technology uses generative AI to analyze the visual properties of reconstructed scenes and automatically generate diverse variations. This capability dramatically scales the number of possible test environments derived from a single scene, introducing variations in lighting, object placement, weather, and other factors.

By leveraging generative AI, developers can create comprehensive simulation datasets that expose AI systems to a wide range of conditions and challenges. This diversity helps prevent overfitting to specific scenarios and improves the AI’s generalization to real-world situations.

For example, an autonomous vehicle AI can be tested not only on a single city street but on countless variations of that street with different pedestrian behaviors, vehicle densities, and environmental conditions—all generated from one original scene.
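To give a feel for how quickly variations multiply, here is a minimal sketch that enumerates scenario configurations from a few variation axes. Note that this is a plain parametric sweep, not generative AI: a generative model would synthesize the varied imagery itself, whereas this only lists the combinations one might ask for. The axis names and values in `AXES` are hypothetical.

```python
import itertools
import random

# Hypothetical variation axes; a real pipeline would define its own.
AXES = {
    "weather": ["clear", "rain", "fog"],
    "time_of_day": ["dawn", "noon", "dusk", "night"],
    "traffic_density": ["low", "medium", "high"],
}


def enumerate_variations(axes):
    """Return every combination of axis values as a list of dicts."""
    keys = list(axes)
    return [dict(zip(keys, combo)) for combo in itertools.product(*axes.values())]


def sample_variations(axes, count, seed=0):
    """Pick `count` distinct variations, reproducibly for a given seed."""
    rng = random.Random(seed)
    return rng.sample(enumerate_variations(axes), count)


variations = enumerate_variations(AXES)
print(len(variations))  # 3 * 4 * 3 = 36 configurations from one base scene
for scenario in sample_variations(AXES, 3):
    print(scenario)
```

Even three small axes yield 36 configurations of a single street, and each added axis multiplies that count, which is why automating the variation step matters.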

🧠 The Synergy of NuRec and Cosmos: Text-Driven 3D Simulation Generation

Looking towards the future, NVIDIA is pushing the boundaries of simulation by integrating NuRec with its Cosmos AI models. This combination enables the generation of 3D simulation environments from simple text prompts.

Imagine instructing an AI system with a phrase like “busy urban intersection with pedestrians and cyclists on a sunny afternoon,” and having it instantly create a detailed, photorealistic 3D scene that matches that description. This capability significantly lowers the barrier to creating complex simulation environments and accelerates the testing and validation pipeline for robotics and autonomous systems.

Text-driven scene generation allows developers to rapidly prototype and explore a wide variety of scenarios without manual 3D modeling or extensive sensor data collection. It also opens up new possibilities for customizing environments to specific testing needs and edge cases.

By refining testing and validation through this innovative approach, the next generation of robotics and autonomous vehicles can achieve higher levels of safety, reliability, and performance.

🔍 Why High-Fidelity Simulation Matters for Autonomous Systems

High-fidelity simulation is not just a technical luxury—it’s a critical component for the safe and effective deployment of autonomous systems. Here’s why:

- Risk Reduction: Testing AI in virtual environments avoids the dangers associated with real-world trials, especially in hazardous or complex scenarios.

- Cost Efficiency: Simulation reduces the need for expensive physical prototypes and repeated field testing.

- Scalability: Virtual environments can be rapidly duplicated and varied to cover a vast array of situations, including rare edge cases.

- Accelerated Development: Developers can iterate quickly, tweaking AI algorithms and immediately seeing the impact in simulation.

- Better Generalization: Exposure to diverse simulated conditions helps AI systems perform reliably across different environments and unexpected situations.

NVIDIA’s neural reconstruction and simulation technologies embody these advantages, providing a robust platform that brings the real world into the digital realm with unmatched fidelity and flexibility.

🚀 The Road Ahead: Advancing Physical AI with NVIDIA Omniverse NuRec

The advancements in neural reconstruction and simulation represent just the beginning of a new chapter for physical AI. As these technologies mature, we can expect even more seamless integration between the digital and physical worlds.

Developers will gain tools that enable:

- Instant creation of rich, interactive 3D environments from real-world data or simple text prompts.

- Highly realistic physics-based interactions that mirror real-world dynamics.

- Automated generation of diverse scenario variations to train and validate AI comprehensively.

- Cross-domain applications—from autonomous vehicles to household robots and industrial automation.

By harnessing the power of NVIDIA Omniverse NuRec and the synergy with generative AI models like Cosmos, the next generation of robotics and autonomous vehicles will be safer, smarter, and more adaptable than ever before.

📚 Learn More and Get Involved

If you’re eager to dive deeper into the technology behind neural reconstruction and its impact on robotics and autonomous vehicles, I highly recommend checking out NVIDIA’s detailed technical blog. It provides a comprehensive look at how Omniverse NuRec instantly renders real-world scenes in interactive simulation and the underlying Gaussian splatting techniques.

The post is titled "How to Instantly Render Real-World Scenes in Interactive Simulation."

Engaging with these resources will give you a front-row seat to the innovations driving the future of physical AI and open up opportunities to contribute to this rapidly evolving field.

Conclusion

Neural reconstruction is revolutionizing how we develop and test physical AI systems by bridging the gap between the real world and simulation. NVIDIA’s pioneering work with Omniverse NuRec, Gaussian splatting, and generative AI is enabling the creation of photorealistic, interactive 3D environments that serve as the ultimate proving grounds for autonomous vehicles and robotics.

From transforming raw sensor data into detailed digital twins to generating diverse, physics-enabled scenarios at scale, these technologies are empowering AI to learn, adapt, and perform reliably in the physical world. The integration of text-driven scene generation further accelerates innovation, making it easier than ever to push the boundaries of what autonomous systems can achieve.

As we continue to explore and expand the capabilities of physical AI, simulation will remain the cornerstone of safe, efficient, and intelligent machine development. The future is here, and it’s being built one neural reconstruction at a time.